
Spark Remove Null Rows

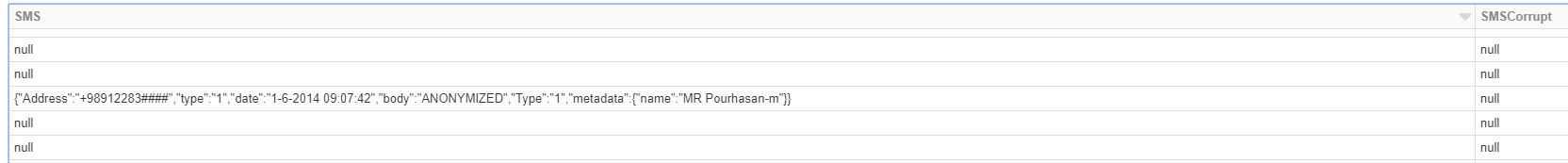

In PySpark, you drop rows with null values by combining the `isNotNull()` column function with a `where()` condition: only rows whose column value is not null pass the filter. The `filter()` function filters the rows of an RDD or DataFrame based on a given condition, and on a DataFrame `where()` is an alias for `filter()`.

If you are familiar with PySpark SQL, you can use `IS NULL` and `IS NOT NULL` conditions to filter rows from a DataFrame.


As part of data cleanup, you may sometimes need to drop rows with null values from a Spark DataFrame, or filter rows by checking whether a column is null or not null; the DataFrame API provides functions for both. Similarly, duplicate rows can be removed from a Spark SQL DataFrame with the `distinct()` and `dropDuplicates()` functions: `distinct()` removes rows that are identical across all columns, while `dropDuplicates()` can also take a list of columns and deduplicate on those columns only.

Once You Know That Rows in Your DataFrame Contain Null Values, You May Want to Do the Following:

Drop rows with null values in Spark. I have a DataFrame that contains one struct field. In PySpark, `DataFrame.na.drop()` (also available as `dropna()`) removes rows that contain null values; note that `DataFrame.drop()` on its own removes columns, not rows.

You Won’t Be Able to Set Nullable to False for All Columns

To filter rows with null values in a Spark DataFrame, use the DataFrame's `filter()` or `where()` function with an `IS NULL` condition or the `isNull()` column function. To drop rows that have any null value, use `na.drop()`, whose default is `how="any"`.

Here We Use a Logical Expression to Filter the Rows

Given data such as `val data = Seq(Row("phil", 44), Row(null, 21))`, you can keep null values out of certain columns by setting `nullable` to `false` in the schema. The `pyspark.sql.DataFrame.fillna()` function, introduced in Spark 1.3.1, replaces null values with another specified value. I want to remove the values that are null from the struct field.

```scala
scala> val df = Seq((null, Some(2)), (Some("A"), Some(4)), (Some("C"), Some(5)), (Some("B"), null), (null, null)).toDF
```

In PySpark, the general form is `dataframe.where(dataframe.column.isNotNull())`, where `column` is the name of the column to check. The solution is shown below:

```scala
// …: java.lang.Float)
val results = Seq(
  Entry(10, null, null),
  Entry(10, null, null),
  Entry(20, null, null)
)
val df: …
```
